Scott Laliberte and Krissy Safi on privacy, security and the connected smart city

IN BRIEF

- 4:35 – Devices bridging the logical and physical world: “It’s not just the data that we have to worry about, but these devices are able to perform actions that can have implications on human life and safety.”

- 12:20 – Corporate responsibility and data: “A lot of companies want to take that data and figure out how to monetize it and when you're monetizing it, a lot of times that’s when you potentially could be crossing the line.”

- 22:10 – Consumers can drive change: “Consumers have the power. If they stop using companies that aren’t careful with the data or putting products out that aren’t secure or they're putting people at risk, they need to take action.”

Worst-case scenario, best-case scenario: what exactly are we in for when the Internet of Things takes over our cities’ traffic, power grid and other infrastructure on a mass scale? Can the enormous possibilities outweigh the risks? Two Protiviti experts, Scott Laliberte, Global Leader of Protiviti’s Emerging Technology Group, and Krissy Safi, Managing Director and Global Practice Lead for Attack & Penetration Testing at Protiviti, join Joe Kornik, VISION by Protiviti’s Editor-in-Chief, to analyze some possible scenarios and the important questions we’ll need to answer to avoid the worst of them.

SCOTT LALIBERTE AND KRISSY SAFI ON PRIVACY, SECURITY AND THE CONNECTED SMART CITY - Audio transcript

Joe Kornik: [Music] Welcome to the VISION by Protiviti podcast. I’m Joe Kornik, Director of Brand Publishing and Editor-in-Chief of VISION by Protiviti, our new quarterly content initiative where we put megatrends under the microscope and look far into the future to examine the strategic implications of big topics that will impact the C-suite and executive boardrooms worldwide. In this, our first topic, “The Futures of Cities,” we’re exploring the evolution urban areas are undergoing post-COVID and how those changes will alter cities over the next decade and beyond.

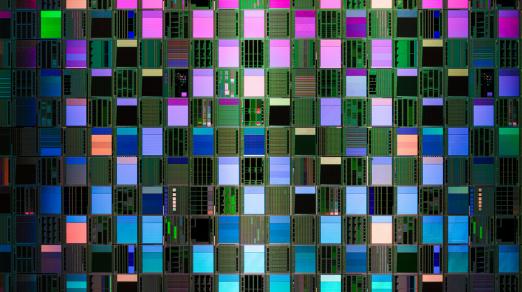

Today, we focus on technology in cities. It’s no surprise that cities all over the world are becoming more digitized: everything from traffic flow and garbage collection to real-time meter reading and autonomous buses and taxis. There are, obviously, lots of big advantages to smart cities as they are commonly called but there’s also a darker side of all this interconnectivity. If hackers are able to penetrate these systems the results could be catastrophic and, doomsday scenarios aside, smart cities are becoming smarter and big data is getting bigger and there are more and more cybersecurity implications to consider, including privacy concerns, data breaches, identity theft, and malware attacks. The pace of all this technology is accelerating at mind-bending speed.

Fortunately, we have two great guests today who will help us sort it all out. Scott Laliberte, Managing Director and Global Leader of Protiviti’s Emerging Technology Group, and Krissy Safi, Managing Director and Global Practice Lead for Attack & Penetration Testing at Protiviti. Scott and Krissy, thanks so much for joining me today.

Krissy Safi: Hi. Thank you for having us.

Scott Laliberte: Yes, glad to be here. Thanks.

Joe Kornik: There are obviously lots of advantages to smart cities as they're commonly called but today, we’re going to discuss some of the potential problems or even dangers that could come from all this interconnectivity. So, I'm curious, what are the risks that should be considered when we talk about smart cities?

Krissy Safi: This is really an important question to be talking through, Joe, because I agree, there are so many advantages and there are some really exciting things to think about with the efficiencies and benefits that these types of advancements will bring. But we do need to think about what are the risks associated with these benefits? What are the potential negative implications? So, looking at it from just purely a digital perspective, the biggest concern in my mind is really around data security and data privacy. So, if you think about all of the data that’s getting collected, processed, stored in order for us to realize the benefits of these innovations, you have to ask, “How could that data be of use and used against us if not managed properly?” For example, could it be used by others to pry into our lives, maybe where we live, what’s our routine, what does our schedule look like, maybe where we shop, what do we spend our money on, where our kids even go to school or maybe even what church do we go to or not go to. Maybe which political events do we attend?

Each of those things alone may sound harmless, inconsequential, but as you piece those individual bits of data together, it tells a pretty complete story of who you are. Okay, what could you do with that? Maybe you could become a target for stalking, could your house get robbed because someone knows so much about your comings and goings and your happenings and your habits. Or even worse, this is a particularly great concern I think to us is, could your actions be taken out of context and could really detrimental conclusions be drawn about you? So, just one basic example, let’s say that you leave the same bar most nights of the week with someone other than your spouse. With that information, someone could conclude that you're drunk and maybe you're having an affair when you really just work at that bar and the coworker walks you out to your car every night to make sure you're safe. So, just one simple example of what could happen when this data is taken out of context.

Scott Laliberte: Krissy, I agree. I think the data side of it is a huge concern and all the additional data that’s provided with smart cities and IoT just makes an existing problem of data security and privacy become an even bigger problem. I think many of us couldn’t even imagine that it could be a bigger problem than it already is today but that’s going to happen. The other part that I think we now need to worry about is smart cities employ IoT, Internet of Things devices, and these devices really are now bridging the logical and physical worlds. It’s not just the data that we have to worry about but these devices are able to perform actions that can have implications on human life and safety. Now, we not only worry about the data side but we have to worry about the physical safety side of it. There was an example where somebody hacked into a water treatment facility down in Florida a few weeks back and was able to manipulate the treatments such that what should just have been the action of cleaning water to keep everybody healthy became making it dangerous to drink and potentially able to kill or physically harm people. That just happened by hacking into that one water treatment facility.

With a smart city, now you have things like the power, right? The power grid could be affected. Traffic lights that are being run by smart systems that could be manipulated to cause accidents. Transit systems that are being run and managed that could have a bus have an accident, have a train have an accident, all these types of things now happening. If you think about just even some of the common attacks today now being performed in a new way, ransomware. Think about how devastating ransomware is to organizations right now if their data gets locked up and you can't have access to that data, “Oh, no. What are we going to do?” Well, pay the ransom or you're not getting access to your data. How about, “Pay the ransom or we’re going to kill a whole bunch of people,” right? Or, “Pay the ransom or this action is going to happen and you now are responsible for all the safety of these people and their lives.” So, we now have to think about these implications and how we’re going to secure it with these new technologies that we don’t know a whole lot about, that we’re still figuring out the standards and the controls and how to protect organizations with these devices in those environments. So, we’re now fighting a new battle in a new tech surface in a new tech landscape with bigger stakes in the game and it’s a lot to think about. I mean, we really have to go into it with that view in mind and really looking at the way we assess and put the controls in place so that we’re ready to defend not just the data but the lives and human safety as well.

Krissy Safi: Yes. I mean and if you think about it too, all these devices are quickly coming to market. I mean, how do you help the developers, the companies building these products to ensure that security was built-in intentionally while not missing out on being the first to market. That’s one of the things that concerns me the most.

Joe Kornik: Yes. It’s really interesting. Scott, you pointed out or you started to take us down the path of some potential doomsday scenarios, talking about ransomware and whatnot. I know that with all this data, it could lead as you pointed out to something much more nefarious, hacking or something worse. What are some of those doomsday scenarios, if I could for a minute just sort of take us down that road a little further than maybe you just did? If we’re not focused on cybersecurity, what are some of the really bad outcomes that we could be looking at?

Scott Laliberte: I'm probably the wrong guy to ask because I'm always thinking about the worst things that can happen, the balance of the risk and reward. So, the doomsday scenarios are pretty significant. The doomsday scenarios are ones that I think really are the ones that lead to the loss of human life and those are the ones that really scare me, wide scale, right? So, like one traffic light being manipulated and there’s an accident. I mean that’s unfortunate, it’s a travesty, but you have that happen on a mass scale so every single traffic light in the city is manipulated in such a way it causes accidents at every single intersection all at the same time. Not only do you have mass casualties occurring at that point in time, first response systems are going to be overwhelmed. There’s no way you're going to be able to respond to that type of broad-scale accident in a timely and concise manner. So, those are the types of scenarios I think really scare me. Like ones that are mass attacks against a safety type system that cause some type of accident for human life.

I had an experience early in my career. I won't say how many years ago it was but many, many years ago back when I was a hands-on-keyboard pen tester. One of my first pen tests was for a chemical-type company and I'm sitting in there, hacking away and got admin really quickly and got into some system that I didn’t know what it was. Because it was a whole green screen system at that time and I was sitting there trying to figure out what to do and doing manual commands to figure out what was available to me. Luckily, the point of contact from the client walked by and he’s like, “Stop, take your hands off the keyboard. You are at a pressure control system and you can blow the whole place up.” So that, very early in my career, gave me that appreciation of the doomsday type scenarios that can occur and how those logical and physical worlds can come married together in a very bad way very quickly.

Joe Kornik: Scary stuff. Earlier, we discussed the sort of cameras on every corner and sort of all this data that’s out there and a big part of IoT in smart cities are those mechanisms, which can be very helpful but problematic as well. Last year, the mayor of San Diego ordered the city’s smart street lights turned off after the ACLU and other groups cited privacy concerns. So, this opens a whole Pandora’s box, I think. When it comes to privacy and data collection and surveillance, I mean where’s the line? It feels like we’re sort of approaching that line or maybe we already crossed it but when does safety become surveillance, I guess is my question.

Scott Laliberte: Yes, Joe. It’s such a tricky area that requires balance because we can have tremendous benefits to safety. I talked about all the bad things that can happen in this regard but there’s also so many good things that can happen and it comes with trade-offs, right? For instance, using the IoT and the AI machine learning that can come with it to be able to alert people to safety issues. Like there’s a safety concession in this part of the facility and you get an alert on your phone to avoid that area or it's able to detect a dangerous person in the crowd because computer vision can see that the person has a weapon or they're acting in a manner that would be consistent with somebody harming people. I already see that the person is harming people, you alert the authorities to take appropriate action. So, there’s so many ways that this technology could be used in a positive manner but then as you mentioned, you flip that, right? So, what if the action of that person isn't actually acting dangerous, it’s just perceived that way or it’s biased based upon characteristics that its models are using and learning and inappropriately identifying people as potentially dangerous. So, you have that kind of bias angle to it as well on top of the whole part about surveillance or the example of you're monitoring somebody going and all of a sudden you think they're having an affair instead of being escorted to their car for safety purpose. It’s an area I think regulation is going to have to really step in and lay down appropriate guidelines, and organizations are going to have to really be responsible in the way that they use that data and the way they're doing things.

I think that necessarily sometimes conflicts with the organization’s desire to be profitable and make revenue. Like a lot of companies want to take that data and figure out how do they monetize it and when you're monetizing it, a lot of times that’s when you potentially could be crossing the line of using it in ways that’re not intended to and violating people’s privacy and using it for things that it shouldn’t be. So, I think we’ve got to be very careful. Corporations and companies need to be responsible in a way that they use this. I think there’s got to be guidelines on what is appropriate as far as response and cooperation with authorities. I think the courts are going to have to also put forth good guidance as well of having to balance the constitutional rights of citizens with the need to protect that safety of others. It’s going to be a very tricky area that I think we’ll see cases kind of evolve over time but the keys to it, I think, are having good policies for the organization to follow. It’s well thought out and there’s good governance function over it and we get guidance put out by the government and other regulatory bodies as well as industry leading groups, and then the cooperation between government and corporations.

Krissy Safi: Yes. I want to go back to your question, Joe, kind of around when does safety become surveillance, and Scott talked about there are certainly many benefits to those things but do we think that surveillance could drive safety? Scott had several examples of that and as I was digging into this case a little bit more, what happened in San Diego, there are a lot of opinion pieces out there saying, “People who are law-abiding citizens, they should be perfectly okay with the surveillance.” It's kind of one of those old adages that we even hear from like a government perspective. If you're following the law, you should be perfectly okay with that kind of surveillance and it just means that your family is going to be safer. They're catching the criminals, they're more likely to be caught because there’s now video footage or maybe they're going to be deterred because they know there’s cameras around, things like that. But when does it really truly become surveillance? It kind of goes back to, then, what is being done with that data? Who has access to that data? What is the retention period around that data? How is it used? Is it somebody just looking through the data to find something that might be suspicious or is it used to investigate an event that happened as evidence of that particular thing?

So, I think it comes down a lot to that and transparency too. So, transparency for the citizens. That was a big issue in the San Diego case: the citizens there felt like they were not informed, that the city was not being transparent about how the data, how the footage from those cameras was being used after the objective, the initial objective, of those cameras was changed. So, I think kind of going forward, adding on to what Scott is saying, is we need to think about, what are we looking like in the future. What kind of policies do we need to have in place? I think transparency is going to be a big part of that and standard regulations and rules about how that data is used and shared and secured, and retention policies, things like that.

Joe Kornik: Right. I think a safe way forward is, if you're outside in a public place, especially a city, you can probably expect that there’s a camera or maybe several cameras. I said outside, I hope it’s only outside. I guess inside as well, there’s potential that you're being viewed in ways you don’t realize. But I think it’s safe to assume that you're probably being recorded at almost any point in time. I think cities are in a tough spot there. So, I'm curious about your thoughts on public officials and cities or private companies. I mean obviously, a lot of these cameras are security cameras that are attached to private buildings and private spaces as well. I mean what steps should cities or private companies be taking right now to ensure that they're not going to be the target of a cyberattack?

Scott Laliberte: Companies got to realize it’s not, will they be a target? They are going to be a target and they need to be prepared for it. There’s no question, right? Everybody is pretty much a target today and as you put more smart technology, more IoT, you're increasing your attack surface. So, you're opening yourself up to be attacked in more ways. So, that has to be accepted. You just have to understand that you're in that situation. What they really need to do is perform a risk assessment and that’s a practice that’s common today, of looking at the risks and assessing the risks and asking the scenarios of what could go wrong and what needs to be protected. Those need to be reperformed and updated given the new technology, a new attack surface that exists with the smart technologies that are in place. Then you're really kind of focusing on those key areas. Where can you put the controls that have the most benefit? What are the highest risks that you need to address because you're going to have to prioritize? There’s going to be so much to protect. You're going to have to prioritize how you implement those protections and then really have a comprehensive program that implements procedures to identify issues, identify both the threats as well as the assets that need to be protected. Put in controls to prevent those attacks from being successful. Putting controls and mechanisms to detect the attacks when they are occurring so you can respond accordingly. Then you have to think about recovery, right? How are you going to recover?

If those five steps sound familiar to many people, that’s because they're the key steps in the NIST CSF, the Cybersecurity Framework, and they apply to technologies including IoT and smart technologies as well. But having those five areas is very important, you’ve got to have the layers of defense. The other thing is making sure that you build the controls and procedures into the designs and plans of smart cities. So, if you're in those early stages right now where people are deploying pieces or parts of the smart city or different technologies, it’s very critical that they think through the security and privacy implications in those designs now because as we all know, trying to go back and retrofit any of those types of controls into a design becomes more expensive and more difficult the further down the road you get with the deployment.

The other thing is making sure you're constantly revisiting those plans to make sure that they're up to date. The technology is changing so quickly. I mean like the technology that was in the forefront six months ago has changed from six and a half months ago, 12 months ago. The attacks that we’re seeing today from six months ago have changed. So, you constantly have to be relooking at your plans and your infrastructure and your assets to know, “What are the new attacks, what are the new controls, what are the latest updates I need to be applying to make sure that I am trying to keep them safe?”

Another aspect is skills, right? Meaning to recognize that the skills needed to secure and manage IoT and smart technology are different than that of your traditional IT infrastructure. The communications are different, some of the protocols are different. The operating systems and the firmware that they run are different. So, thinking that you're going to get a traditional server admin that can go in and secure and deploy IoT is going to be an unfair expectation to that person. So, you need to make sure that you're employing appropriately skilled resources either in-house or through partnerships and make sure that they're involved in the planning and protection of that infrastructure. Make sure you're accounting for life cycle management updates and then also we have to think about the cross-border implications and the broader implications because a lot of the jurisdictions have different requirements and a lot of their technologies involve people within jurisdictions, customers from different jurisdictions. So, until we get consistency in the laws and standards, you have to realize how those cross-border standards and laws pertain to you and how you’re going to be able to comply with them.

Krissy Safi: I have one other thing to add to that, actually. I’ve seen our clients also going back to the vendors or the developers of these smart city products and asking questions around how they built security into the product. Answering many of the questions that you mentioned: what is their life cycle update process, their security update process. All of those kinds of things, and actually, sometimes doing a “bake off” between competitive products to see who is responding to the security findings the quickest, or who has less impactful or more impactful findings, things like that. So, really kind of taking it to the next level and holding our manufacturers of smart city products accountable for the security too and making it a purchasing decision.

Joe Kornik: So, I just have one more question for the two of you. You have been very, very helpful for a fascinating conversation around cybersecurity and some of the darker recesses of potential hazards. I want to look a little bit forward. I mean, this is the future of cities that we’re talking about here and smart cities are only going to get smarter and big data is only going to get bigger. So, what do you think will sort of end up in terms of cybersecurity and privacy as it pertains to smart cities in say 2030 or even beyond that? I mean look as far out to the future as your mind can take us.

Krissy Safi: Yes, so true. Smart city technology and the proliferation of the data that’s associated with that is only going to grow. What I find interesting too in some of the opinion pieces and just, generally, looking at different generations of consumers is, consumers are generally okay with the collection and usage of their data. They click okay to the agreements really without reading through. They just click through, click through, “What’s going to make my life the easiest,” and they expect that their data is going to be collected and shared. But going back to where we need to go, I think it really comes down to that transparency, making sure that it is transparent to our consumers that the data is being collected, what it’s going to be used for and then having the governance and oversight of how that data is collected, shared, and retained. The personal security ramifications that we mentioned earlier could be significant. So, having the basics of how is that data collected, shared, retained, and policies and worrying about the cross-border implications and are we streamlined, things like that.

Scott Laliberte: Krissy, your point around consumers being able to drive change is going to be very important. Hopefully by then, we’ll have some type of balance and consistency between the different jurisdictions, the state laws, the federal laws, international laws. But given what we’ve seen in the past, I'm hopeful but also skeptical at the same time. I think consumers have to realize, as Krissy said, that they too can drive change because they can hit these companies in the pocketbook, right? Like if they stop using products or stop using companies because of the questionable practices that they have with the use of their data or putting products out that aren’t secure and they're putting people at risk. They need to take action and I think we’ve seen the publicity of some of the questionable actions of big tech but I don’t think we’ve necessarily seen the consumers react to that in such a way that those companies felt enough pain in order to promote change. With social media and the ability for people to organize very quickly and take action, when you just look at how the stock market was able to be manipulated with social media, those types of things being applied to trying to drive the behavior of manufacturers and companies that implement this technology, I think that could be a very powerful tool. I balance that with something like a DuckDuckGo browser that does take actions to protect your safety. How many people use that versus some of the other more traditional browsers that openly tell you they're going to sell and use your data, right? Until we, as consumers, start to embrace, “You know what, maybe I need to give up a little bit of functionality or a little bit of ease for a little bit of greater privacy and protection,” I fear that the change will be slow and, hopefully, we’ll see that evolution continue and the consumer will drive it.

One other thing I will say just about 2030 is the technology landscape is going to continue to evolve. It's going to be different and we’ll have some new challenges that we have to deal with, right? Quantum computing is evolving very quickly. It’s going to really radically change the way computing occurs today. It has use cases that we’ll be able to resolve that we can’t even think about solving today. That’s going to open up great safety, potential possibilities but also open up new threats that we’ll need to think about and there are others beyond that. So, we’re just going to have to stay on our toes and really keep applying those same risk assessments and counter balance of benefit versus controls as we go forward.

Joe Kornik: There’s no playbook for this, right? Thinking nine years in the future is hard enough when we’re thinking in the non-big data world, right? I mean, how quickly that exponentially increases the amount of data in the world and how that can be used and the technology advances faster than we can keep up with. But that’s why we have you, Scott and Krissy, to help us sort of go out and to keep us on the straight and narrow as we move forward. So, thank you for a fascinating discussion. The Age of the Jetsons I think is coming quicker than we might be ready for and like I said there’s no playbook for it. We’re just going to have to figure it out as we go and thanks again for your time today and, again, helping us sort through some of these issues.

Krissy Safi: Thank you.

Scott Laliberte: Thank you. Thanks for having us.

Krissy Safi: Yes, thank you.

Joe Kornik: Thanks for listening to the VISION by Protiviti podcast. Please rate and subscribe wherever you listen to podcasts and visit us at vision.protiviti.com.

Scott Laliberte is the Global Leader of Protiviti’s Emerging Technology Group. Scott and his team enable clients to leverage emerging technologies and methodologies to innovate, while helping organizations transform and succeed by focusing on business value and managing risk. His team specializes in many technological areas, including artificial intelligence and machine learning, internet of things, cloud, blockchain, and quantum computing. In previous roles at Protiviti, Scott was the global leader of Protiviti's Cyber Security Practice and Attack and Penetration Labs.

Krissy Safi is Managing Director and Global Practice Lead for Attack & Penetration Testing at Protiviti. An ethical hacker turned business leader, Krissy is a creative thinker with an entrepreneurial spirit driving the development of multi-million dollar security practices for both the private and public sector. Krissy has nearly two decades of information security experience across all domains of security in support of Fortune 500 companies and government agencies throughout numerous international locations.